11am - 12 noon

Wednesday 26 June 2024

On the Interpretability of Reinforcement Learning

PhD Viva Open Presentation for Herman Yau

Hybrid event - All Welcome!

Free

University of Surrey

Guildford

Surrey

GU2 7XH

This event has passed

Abstract:

Reinforcement learning is a powerful tool for automating sequential decisions. Traditionally, reinforcement learning solutions were confined to small problem spaces with known optimal solutions. With the deep learning boom, there has been a trend to incorporate neural networks as function approximators for the optimal policy leading to the desired outcome. Unfortunately, these policies are black-box models whose decisions are hard to interpret, leaving users vulnerable when the agent's advice may in fact be harmful. This has led to a growing need for explanations of why an agent prefers a particular action in a given scenario.

This thesis attempts to bridge the gap between explainable AI and reinforcement learning. The goal is to generate human-friendly explanations for any reinforcement learning agent so that users can understand the reasoning behind the decisions made. We achieve this by tracing the agent's learning experience to discover its intention.

We start by investigating the Bellman equation, which is the backbone of most reinforcement learning algorithms. We hypothesize that it is not possible to differentiate between intended outcomes based on value estimates alone. The first contribution of this thesis provides a formal proof of this ambiguity. We further show that the value function that evaluates the quality of an observation can be decomposed into visitation probability and reward components. These are used to introduce a novel form of explanation for reinforcement learning, namely intention-based explanations.
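As a rough illustration of the ambiguity and decomposition described above, the sketch below uses the standard identity that a state's value equals the discounted expected visitation of each state multiplied by that state's reward. It is not the thesis's own formulation: the four-state toy chain, the state-based rewards, the truncation horizon, and the names `discounted_visitation` and `value_from_visitation` are all assumptions made for this example.

```python
def discounted_visitation(P, gamma, start, horizon=500):
    """Approximate sum_t gamma^t * Pr(s_t = s | s_0 = start) for every state s.

    P is the transition matrix induced by a fixed policy (P[s][s2]).
    The infinite sum is truncated at `horizon` steps (an assumption).
    """
    n = len(P)
    dist = [0.0] * n          # state distribution at the current time step
    dist[start] = 1.0
    visits = [0.0] * n        # accumulated discounted visitation
    discount = 1.0
    for _ in range(horizon):
        for s in range(n):
            visits[s] += discount * dist[s]
        # Push the distribution one step forward through the policy dynamics.
        dist = [sum(dist[s] * P[s][s2] for s in range(n)) for s2 in range(n)]
        discount *= gamma
    return visits


def value_from_visitation(visits, rewards):
    """Value = sum over states of (discounted visitation) * (reward)."""
    return sum(d * r for d, r in zip(visits, rewards))


# Hypothetical toy problem: state 0 is the start, states 1 and 2 are two
# different goals with equal reward, state 3 is an absorbing terminal state.
rewards = [0.0, 1.0, 1.0, 0.0]
gamma = 0.9

# Policy A heads for goal 1; policy B heads for goal 2.
P_a = [[0, 1, 0, 0], [0, 0, 0, 1], [0, 0, 0, 1], [0, 0, 0, 1]]
P_b = [[0, 0, 1, 0], [0, 0, 0, 1], [0, 0, 0, 1], [0, 0, 0, 1]]

visits_a = discounted_visitation(P_a, gamma, start=0)
visits_b = discounted_visitation(P_b, gamma, start=0)
value_a = value_from_visitation(visits_a, rewards)
value_b = value_from_visitation(visits_b, rewards)

print("value A:", value_a, "visits to goals:", visits_a[1], visits_a[2])
print("value B:", value_b, "visits to goals:", visits_b[1], visits_b[2])
```

In this toy case both policies have the same value at the start state, yet their visitation profiles point at different goals, so the value alone cannot say which outcome the agent is pursuing while the visitation component can.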

This first contribution works best in small discrete environments. It does not scale well to environments with large state-action spaces, since the space complexity increases exponentially. This is especially prominent in continuous environments, where the total number of state-action combinations is intractable and it is therefore infeasible to keep state visitation counts. The second contribution of this thesis tackles this problem by constraining the problem space in a semantically meaningful way. To achieve this, we take inspiration from conceptual explanations. By handcrafting meaningful and relevant concepts for a given environment, we effectively replace the enormous state space with a targeted conceptual explanation space, which functions similarly to the intention-based explanations of the first contribution. This drastically reduces resource usage and allows for simple, human-friendly explanations.

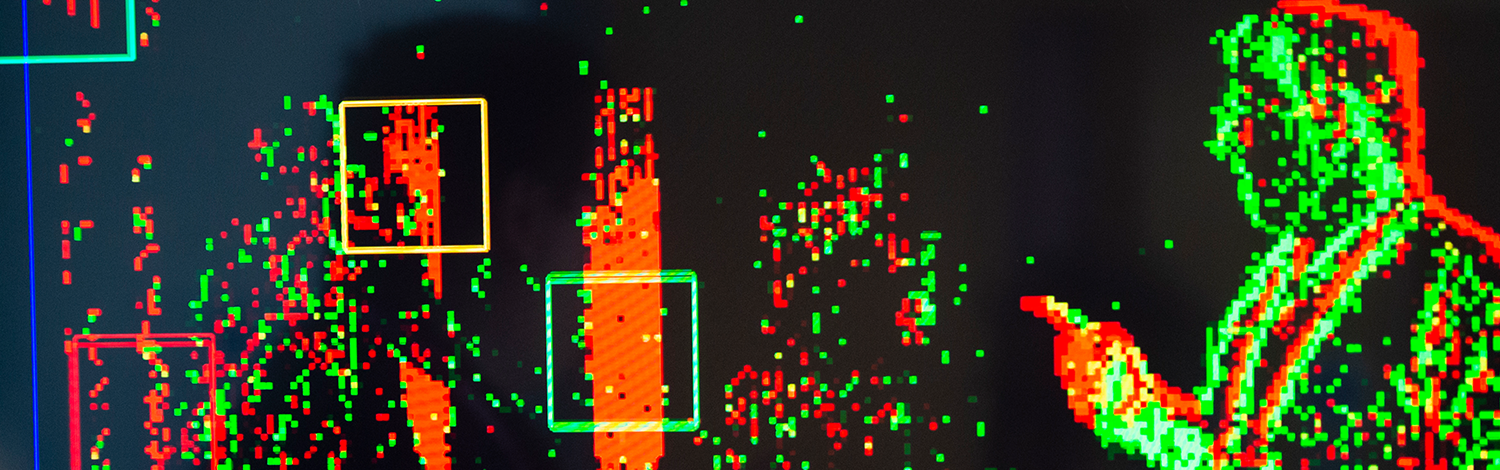

Unfortunately, the approach taken for the second contribution requires expert knowledge to select concepts that produce logical explanations which make sense in a given environment. The final contribution takes the idea of conceptual explanations further by replacing the handcrafted concepts with an automated symmetry detection framework, whose detected symmetries act as the concepts used for generating conceptual explanations. This allows us to automate concept selection regardless of the choice of environment, which eliminates the need for external experts to label concepts.

We hope that the work conducted throughout this project will serve as a stepping stone towards uncovering agent intention, in particular by incorporating concepts in the explanation space to promote human-friendly agents for safety-critical applications.