11am - 12 noon

Wednesday 13 September 2023

Vehicle state estimation for autonomous driving

PhD Viva Open Presentation by Nimet Kaygusuz.

All welcome!

Free

This event has passed

Abstract

Robust perception of the environment around a vehicle through onboard sensors is a major hurdle in the quest to realise autonomous driving. To ensure safety, accurate and robust systems are required that can operate within any environment.

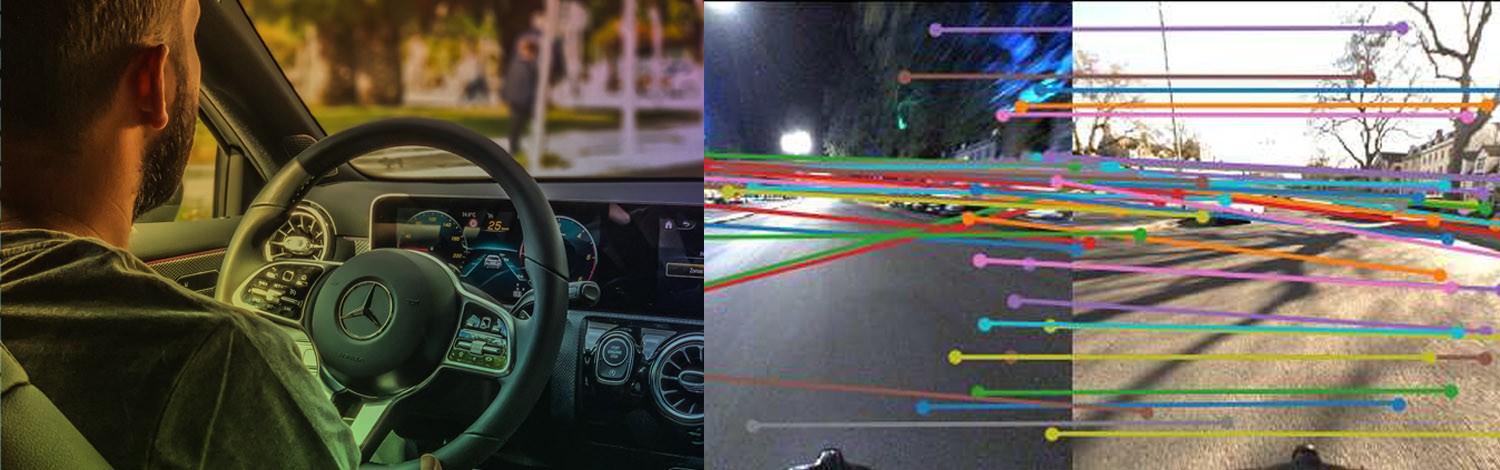

One of the most fundamental components of a moving agent is ego-motion estimation and Visual Odometry (VO) is a popular choice to achieve it. This is because cameras are a cost-effective solution and are often already installed on modern vehicles. Traditional approaches to VO have been studied for decades and typically work by detecting and matching hand-crafted features.

The main disadvantage of this is they are susceptible to failure in poor lighting and low-textured environments, where feature detection and matching tend to fail. In recent years, learning-based VO approaches have become popular and achieved competitive performance due to their ability to extract high-level representations without relying on hand-crafted features.

Despite encouraging results, there are several shortcomings that need to be addressed to deploy such motion estimation systems into real-life mobile agents. This thesis proposes novel architectures for learning-based VO and fusion techniques to address these drawbacks.

Uncertainty of the estimated motion provides a measure of its credibility and is often crucial for systems that depend upon motion output, such as path planning, vehicle state estimation, sensor fusion etc. Unfortunately, estimating uncertainty for VO is especially challenging as modelling the environmental factors, such as lighting, occlusions and weather, is often not feasible.

In the first contribution of this thesis, we propose a robust VO model to estimate 6-DoF poses and uncertainties from video. A learning-based algorithm, MDN-VO, is built which utilises a CNN-RNN hybrid model to learn feature representations from image sequences. A Mixture Density Network (MDN) is then employed to regress the camera motion as a mixture of Gaussians. We then employ a multi-camera approach to provide robustness to individual estimation failures and propose two multi-camera fusion techniques.

Any fusion approach must have the ability to balance incoming signals based on their uncertainties. In the second contribution of this thesis, we introduce a naive fusion approach, MC-FUSION, which learns to fuse multi-view motion estimations considering their uncertainties. MC-FUSION outperforms single-camera estimations and the state of the art, validating the benefits of a multi-view VO approach.

The approach expects estimations to be synchronised which is problematic for real-world applications. This is because acquiring images from multiple cameras at the same time requires ‘gen locked’ or synchronised hardware. Also, sufficient bandwidth is required to capture and process those images simultaneously which in turn has cost implications. Therefore, it is advantageous that a fusion system has the ability to fuse asynchronous signals.

In the third contribution of this thesis, we introduce AFT-VO, a novel transformer-based deep fusion framework. Our framework combines predictions from asynchronous multi-view cameras and accounts for the time discrepancies of measurements coming from different sources. It can fuse signals from any number of sources and, more importantly, it does not require those sources to be synchronised or to be at the same frequency.

We evaluate our model under severe driving scenarios, such as rain and night-time scenes, with promising results. However, the nature of VO is that small errors in the estimation of each frame accumulate over time which results in drift between estimated and real trajectories.

In the last contribution of this thesis, we employ a pose graph optimisation technique to reduce the VO drift.

Unlike other approaches, we utilise multi-view cameras to detect loops which gather information from different views and provide a more robust loop detection pipeline.

In this chapter, we also collect a large-scale multi-view dataset for the evaluation of VO and SLAM systems.

Finally, we conclude with thorough experiments demonstrating the drift in VO can be reduced via the optimisation pipeline.