Dr Avishkar Saha

About

My research project

Scene evolution modelling

Developing methods for scene estimation within the context of autonomous vehicles, from mapping images into bird's-eye view to trajectory prediction.

Supervisors

Publications

Graph convolutional networks (GCNs) enable end-to-end learning on graph-structured data. However, many works assume a given graph structure. When the input graph is noisy or unavailable, one approach is to construct or learn a latent graph structure. These methods typically fix the node degree for the entire graph, which is suboptimal. Instead, we propose a novel end-to-end differentiable graph generator which builds graph topologies where each node selects both its neighborhood and its size. Our module can be readily integrated into existing pipelines involving graph convolution operations, replacing the predetermined or existing adjacency matrix with one that is learned and optimized as part of the general objective. As such, it is applicable to any GCN. We integrate our module into trajectory prediction, point cloud classification and node classification pipelines, resulting in improved accuracy over other structure-learning methods across a wide range of datasets and GCN backbones. We will release the code.
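
As a rough illustration of why a single graph-wide node degree can be limiting, the sketch below builds an adjacency matrix from pairwise scores, once with the same degree for every node and once with a per-node degree. It is a hard, non-differentiable top-k selection, not the differentiable generator described above. The function name `top_k_neighbours`, the score matrix and the degree lists are all hypothetical.

```python
def top_k_neighbours(scores, k_per_node):
    """Return a directed 0/1 adjacency matrix in which node i keeps
    its k_per_node[i] highest-scoring neighbours (self-loops excluded)."""
    n = len(scores)
    adjacency = [[0] * n for _ in range(n)]
    for i in range(n):
        # Rank all other nodes by their score with respect to node i.
        candidates = sorted(
            (j for j in range(n) if j != i),
            key=lambda j: scores[i][j],
            reverse=True,
        )
        for j in candidates[: k_per_node[i]]:
            adjacency[i][j] = 1
    return adjacency


# Hypothetical pairwise affinity scores between four nodes.
scores = [
    [0.0, 0.9, 0.8, 0.1],
    [0.9, 0.0, 0.2, 0.1],
    [0.8, 0.2, 0.0, 0.7],
    [0.1, 0.1, 0.7, 0.0],
]

# Same degree everywhere: node 3 is forced to keep a weak 0.1 edge.
fixed = top_k_neighbours(scores, [2, 2, 2, 2])

# Per-node degree: nodes 1 and 3 keep only their single strong edge.
variable = top_k_neighbours(scores, [2, 1, 2, 1])
```

With the fixed degree, node 3 has to keep a low-scoring neighbour simply to fill its quota. With per-node degrees, it keeps only the edge to node 2.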

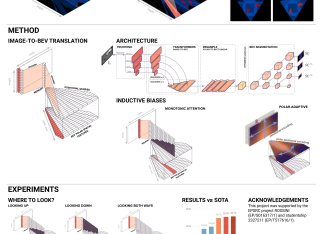

We approach instantaneous mapping, converting images to a top-down view of the world, as a translation problem. We show how a novel form of transformer network can be used to map from images and video directly to an overhead map or bird's-eye-view (BEV) of the world, in a single end-to-end network. We assume a 1-1 correspondence between a vertical scanline in the image and rays passing through the camera location in an overhead map. This lets us formulate map generation from an image as a set of sequence-to-sequence translations. This constrained formulation, based upon a strong physical grounding of the problem, leads to a restricted transformer network that is convolutional in the horizontal direction only. The structure allows us to make efficient use of data when training, and obtains state-of-the-art results for instantaneous mapping on three large-scale datasets, including 15% and 30% relative gains over the best-performing existing methods on the nuScenes and Argoverse datasets, respectively.

The ability to produce large-scale maps for navigation, path planning and other tasks is a crucial step for autonomous agents, but has always been challenging. In this work, we introduce BEV-SLAM, a novel type of graph-based SLAM that aligns semantically-segmented Bird's Eye View (BEV) predictions from monocular cameras. We introduce a novel form of occlusion reasoning into BEV estimation and demonstrate its importance to aid spatial aggregation of BEV predictions. The result is a versatile SLAM system that can operate across arbitrary multi-camera configurations and can be seamlessly integrated with other sensors. We show that the use of multiple cameras significantly increases performance, and achieves lower relative error than high-performance GPS. The resulting system is able to create large, dense, globally-consistent world maps from monocular cameras mounted around an ego vehicle. The maps are metric and correctly-scaled, making them suitable for downstream navigation tasks.

Constructing Bird's-Eye-View (BEV) maps from monocular images is typically a complex multi-stage process involving the separate vision tasks of ground plane estimation, road segmentation and 3D object detection. However, recent approaches have adopted end-to-end solutions which warp image-based features from the image plane to BEV while implicitly taking account of camera geometry. In this work, we show how such instantaneous BEV estimation of a scene can be learnt, and how a better state estimate of the world can be achieved by incorporating temporal information. Our model learns a representation from monocular video through factorised 3D convolutions and uses this to estimate a BEV occupancy grid of the final frame. We achieve state-of-the-art results for BEV estimation from monocular images, and establish a new benchmark for single-scene BEV estimation from monocular video.
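
The abstract above does not say how the 3D convolutions are factorised. As a sketch under one common assumption, a spatial 1 x k x k convolution followed by a temporal t x 1 x 1 convolution, the weight counts of a full and a factorised layer can be compared as follows. The function names, channel widths and kernel sizes are illustrative only, and biases are ignored.

```python
def full_conv3d_weights(c_in, c_out, t, k):
    """Weights in one full 3D convolution with a t x k x k kernel."""
    return c_in * c_out * t * k * k


def factorised_conv3d_weights(c_in, c_mid, c_out, t, k):
    """Weights in a spatial (1 x k x k) convolution followed by a
    temporal (t x 1 x 1) convolution, with c_mid channels in between."""
    spatial = c_in * c_mid * k * k
    temporal = c_mid * c_out * t
    return spatial + temporal


# Hypothetical layer: 64 -> 64 channels, 3 frames, 3 x 3 spatial kernel.
full = full_conv3d_weights(64, 64, t=3, k=3)
factorised = factorised_conv3d_weights(64, 64, 64, t=3, k=3)
print(full, factorised)
```

Under these assumed sizes the factorised layer uses fewer than half the weights of the full 3D kernel while still covering the same spatio-temporal extent.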

Estimating a semantically segmented bird's-eye-view (BEV) map from a single image has become a popular technique for autonomous control and navigation. However, existing methods show an increase in localization error with distance from the camera. While such an increase in error is entirely expected (localization is harder at distance), much of the drop in performance can be attributed to the cues used by current texture-based models. In particular, they make heavy use of object-ground intersections (such as shadows) [10], which become increasingly sparse and uncertain for distant objects. In this work, we address these shortcomings in BEV mapping by learning the spatial relationship between objects in a scene. We propose a graph neural network which predicts BEV objects from a monocular image by spatially reasoning about an object within the context of other objects. Our approach sets a new state-of-the-art in BEV estimation from monocular images across three large-scale datasets, including a 50% relative improvement for objects on nuScenes.

We approach instantaneous mapping, converting images to a top-down view of the world, as a translation problem. We show how a novel form of transformer network can be used to map from images and video directly to an overhead map or bird's-eye-view (BEV) of the world, in a single end-to-end network. We assume a 1-1 correspondence between a vertical scanline in the image and rays passing through the camera location in an overhead map. This lets us formulate map generation from an image as a set of sequence-to-sequence translations. Posing the problem as translation allows the network to use the context of the image when interpreting the role of each pixel. This constrained formulation, based upon a strong physical grounding of the problem, leads to a restricted transformer network that is convolutional in the horizontal direction only. The structure allows us to make efficient use of data when training, and obtains state-of-the-art results for instantaneous mapping on three large-scale datasets, including 15% and 30% relative gains over the best-performing existing methods on the nuScenes and Argoverse datasets, respectively.
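
The scanline-to-ray correspondence assumed above follows from pinhole geometry, and a minimal sketch can check it numerically. The sketch assumes an ideal pinhole camera with no lens distortion and no roll. The intrinsics `fx` and `cx`, the chosen column and both function names are hypothetical.

```python
import math


def column_to_ray_angle(u, fx, cx):
    """Azimuth (radians, measured from the optical axis) of the
    overhead-map ray observed by image column u."""
    return math.atan2(u - cx, fx)


def bev_point_to_column(x, z, fx, cx):
    """Image column of a point with lateral offset x and forward
    distance z > 0; the point's height does not affect the column."""
    return fx * x / z + cx


fx, cx = 500.0, 320.0  # hypothetical focal length and principal point (pixels)
u = 420.0              # an arbitrary image column
theta = column_to_ray_angle(u, fx, cx)

# Walk along the ray at several ranges and project each point back.
columns = []
for r in (5.0, 20.0, 50.0):
    x, z = r * math.sin(theta), r * math.cos(theta)
    columns.append(bev_point_to_column(x, z, fx, cx))
```

Every point along the ray projects back to the same column, so each image column can be treated as one input sequence and its ray in the map as the matching output sequence.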

Estimating a semantically segmented bird's-eye-view (BEV) map from a single image has become a popular technique for autonomous control and navigation. However, existing methods show an increase in localization error with distance from the camera. While such an increase in error is entirely expected (localization is harder at distance), much of the drop in performance can be attributed to the cues used by current texture-based models. In particular, they make heavy use of object-ground intersections (such as shadows) [9], which become increasingly sparse and uncertain for distant objects. In this work, we address these shortcomings in BEV mapping by learning the spatial relationship between objects in a scene. We propose a graph neural network which predicts BEV objects from a monocular image by spatially reasoning about an object within the context of other objects. Our approach sets a new state-of-the-art in BEV estimation from monocular images across three large-scale datasets, including a 50% relative improvement for objects on nuScenes.