11am - 12 noon

Wednesday 12 January 2022

Learning generic deep feature representations

PhD Viva Open Presentation by Mr Jaime Spencer Martin. All Welcome!

Free

This event has passed

Speakers

Learning Generic Deep Feature Representations

Abstract:

Feature representations are the backbone of computer vision. They allow us to summarize the overwhelming amount of visual information and distil it into its core components. Traditionally, features were hand-crafted to encode patterns that the researcher believed would be useful when solving a given task. Nowadays, with the advent of deep learning, the features have become part of the learning process. Unfortunately, most deep learning frameworks assume these features will be implicitly learnt as a by-product of the training process, resulting in features that only solve one task and cannot be easily reused. This slows down the development of solutions to new problems, as features must be re-learnt from scratch.

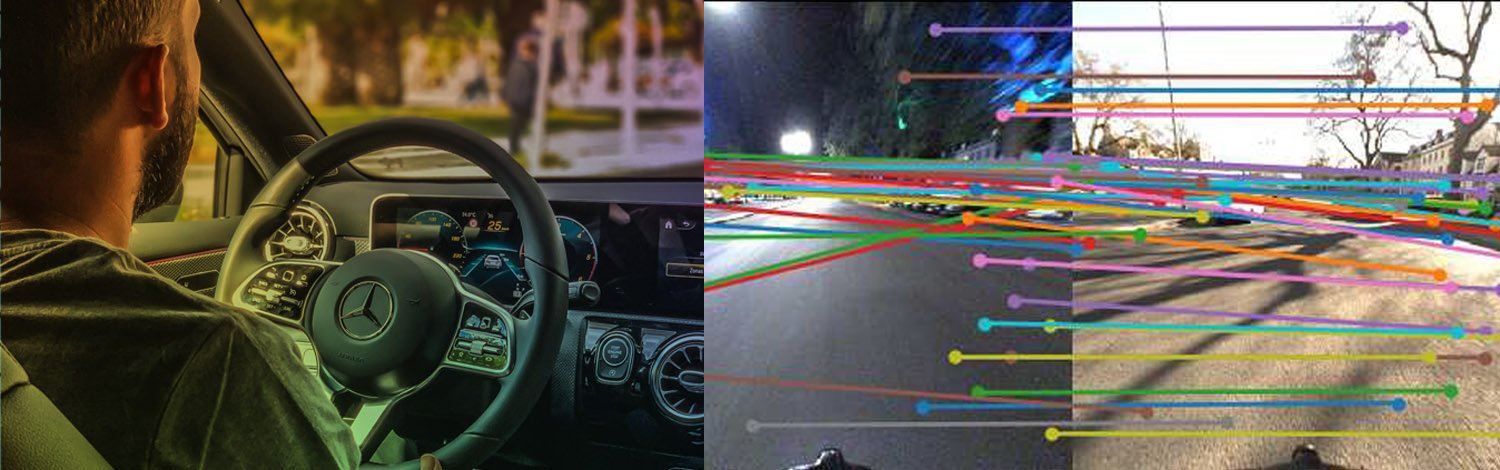

This thesis revisits the idea of dedicated feature learning as its own independent task in the age of deep learning. The aim is to learn representations containing useful properties that are effective across a range of different tasks. We achieve this by bridging the gap between explicit features used for geometric correspondence estimation and the implicit features used in deep learning frameworks for depth estimation, semantic segmentation, visual localization and more.

In the field of correspondence estimation, learned feature descriptors have recently started outperforming their hand-crafted counterparts. We make the observation that these approaches are simply learning a generic embedding that captures the (dis)similarity between different points. We argue that this goal is also shared by the implicit feature learning step of nearly all computer vision algorithms. The first contribution of this thesis proposes a generic dense feature learning approach based on this observation. We further show how the selection of negatives used to train the network affects the properties of the learnt features and downstream tasks. To this end, we introduce the concept of spatial negative mining, where negative samples are drawn based on their geometric relationship to the original positive correspondence.

As with other feature learning approaches, our first contribution requires pixel-wise ground truth correspondences---obtained via LiDAR or SfM data---during training. Obtaining this data can be challenging, especially if the images come from complex cross-seasonal environments with wide temporal or spatial baselines. The second and third contributions aim to learn features robust to the drastic changes in appearance caused by challenging weather and seasonal conditions. To achieve this we developed two self-supervised techniques that do not require ground truth annotations, but still show the network real-world data. The first achieves this by replacing strong spatial constraints with a global statistical criterion. This assumes each feature descriptor should only match well with a single feature in the other image. The third contribution re-incorporates within-season spatial constraints by simultaneously learning dense monocular depth, visual odometry and dense feature descriptors in a self-supervised manner.

Whilst the features presented above are generic, they typically need to be finetuned on a task to maximize their performance. Unfortunately, this process removes their generality. To solve this we turn to multi-task learning, where the objective is to learn features that perform well on the given set of training tasks. The final contribution of this thesis shows how spatial attention allows us to learn a generic feature space from which multiple downstream tasks can select pertinent features. This allows us to effectively exploit the relationships between multiple tasks and easily adapt to new ones, resulting in better performance and a significantly reduced resource usage.