Dr Sara Atito Ali Ahmed

Academic and research departments

Centre for Vision, Speech and Signal Processing (CVSSP), Faculty of Engineering and Physical Sciences.About

Biography

Sara Ahmed holds a joint position at the University of Surrey between the Centre for Vision, Speech and Signal Processing (CVSSP) and the Institute of People-Centred Artificial Intelligence. Prior to her current role, Sara completed her PhD in computer science at Sabanci University, where she specialised in deep learning ensembles for image understanding. Currently, her primary focus is on advancing the field of self-supervised learning for vision. Her innovative work in this area has led to her recognition as a Surrey Future Fellow - a prestigious recognition of her outstanding early career achievements. Additionally, her interdisciplinary work and commitment to addressing global issues have earned her a fellowship at the Institute for Sustainability, underscoring her impact in both computer science and broader real-world challenges.

Sustainable development goals

My research interests are related to the following:

Publications

Contrastive learning has achieved great success in skeleton-based action recognition. However, most existing approaches encode the skeleton sequences as entangled spatiotemporal representations and confine the contrasts to the same level of representation. Instead, this paper introduces a novel contrastive learning framework, namely Spatiotemporal Clues Disentanglement Network (SCD-Net). Specifically, we integrate the decoupling module with a feature extractor to derive explicit clues from spatial and temporal domains respectively. As for the training of SCD-Net, with a constructed global anchor, we encourage the interaction between the anchor and extracted clues. Further, we propose a new masking strategy with structural constraints to strengthen the contextual associations, leveraging the latest development from masked image modelling into the proposed SCD-Net. We conduct extensive evaluations on the NTU-RGB+D (60&120) and PKU-MMD (I&II) datasets, covering various downstream tasks such as action recognition, action retrieval, transfer learning, and semi-supervised learning. The experimental results demonstrate the effectiveness of our method, which outperforms the existing state-of-the-art (SOTA) approaches significantly.

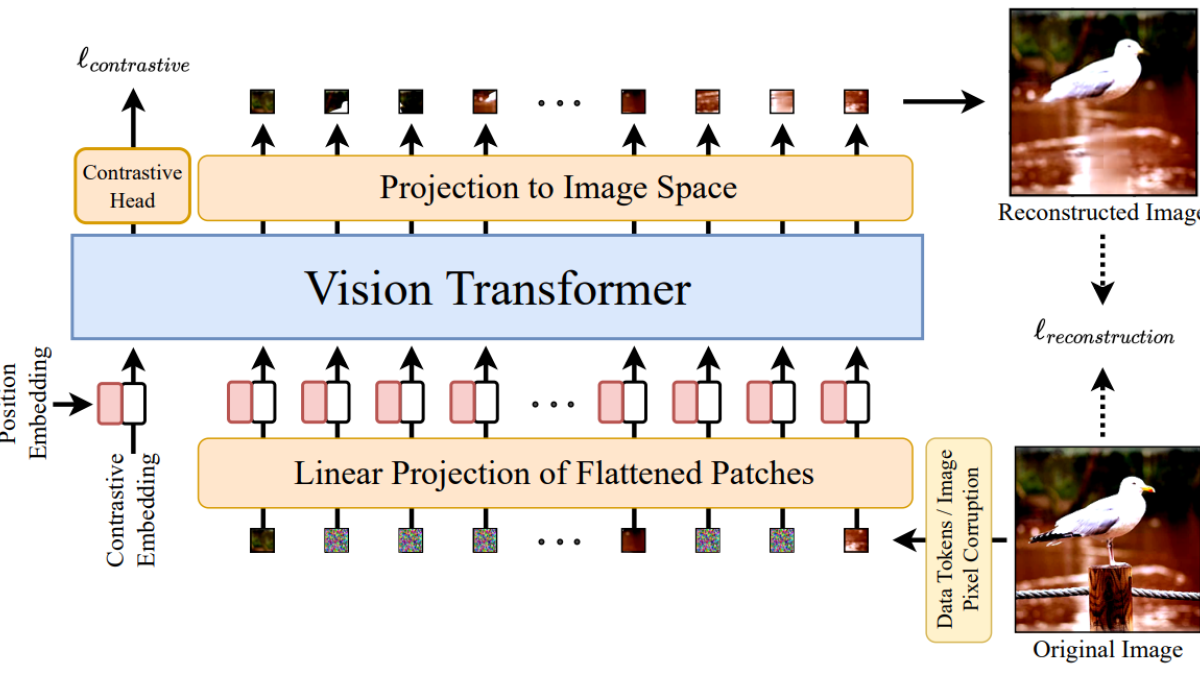

Self-supervised pretraining (SSP) has emerged as a popular technique in machine learning, enabling the extraction of meaningful feature representations without labelled data. In the realm of computer vision, pretrained vision transformers (ViTs) have played a pivotal role in advancing transfer learning. Nonetheless, the escalating cost of finetuning these large models has posed a challenge due to the explosion of model size. This study endeavours to evaluate the effectiveness of pure self-supervised learning (SSL) techniques in computer vision tasks, obviating the need for finetuning, with the intention of emulating human-like capabilities in generalisation and recognition of unseen objects. To this end, we propose an evaluation protocol for zero-shot segmentation based on a prompting patch. Given a point on the target object as a prompt, the algorithm calculates the similarity map between the selected patch and other patches, upon that, a simple thresholding is applied to segment the target. Another evaluation is intra-object and inter-object similarity to gauge discriminatory ability of SSP ViTs. Insights from zero-shot segmentation from prompting and discriminatory abilities of SSP led to the design of a simple SSP approach, termed MMC. This approaches combines Masked image modelling for encouraging similarity of local features, Momentum based self-distillation for transferring semantics from global to local features, and global Contrast for promoting semantics of global features, to enhance discriminative representations of SSP ViTs. Consequently, our proposed method significantly reduces the overlap of intra-object and inter-object similarities, thereby facilitating effective object segmentation within an image. Our experiments reveal that MMC delivers top-tier results in zero-shot semantic segmentation across various datasets.

Transformers, which were originally developed for natural language processing, have recently generated significant interest in the computer vision and audio communities due to their flexibility in learning long-range relationships. Constrained by the data hungry nature of transformers and the limited amount of labelled data, most transformer-based models for audio tasks are finetuned from ImageNet pretrained models, despite the huge gap between the domain of natural images and audio. This has motivated the research in self-supervised pretraining of audio transformers, which reduces the dependency on large amounts of labeled data and focuses on extracting concise representations of audio spectrograms. In this paper, we propose L ocal- G lobal A udio S pectrogram v I sion T ransformer, namely ASiT, a novel self-supervised learning framework that captures local and global contextual information by employing group masked model learning and self-distillation. We evaluate our pretrained models on both audio and speech classification tasks, including audio event classification, keyword spotting, and speaker identification. We further conduct comprehensive ablation studies, including evaluations of different pretraining strategies. The proposed ASiT framework significantly boosts the performance on all tasks and sets a new state-of-the-art performance in five audio and speech classification tasks, outperforming recent methods, including the approaches that use additional datasets for pretraining.

Recently, masked image modeling (MIM), an important self-supervised learning (SSL) method, has drawn attention for its effectiveness in learning data representation from unlabeled data. Numerous studies underscore the advantages of MIM, highlighting how models pretrained on extensive datasets can enhance the performance of downstream tasks. However, the high computational demands of pretraining pose significant challenges, particularly within academic environments, thereby impeding the SSL research progress. In this study, we propose efficient training recipes for MIM based SSL that focuses on mitigating data loading bottlenecks and employing progressive training techniques and other tricks to closely maintain pretraining performance. Our library enables the training of a MAE-Base/16 model on the ImageNet 1K dataset for 800 epochs within just 18 hours, using a single machine equipped with 8 A100 GPUs. By achieving speed gains of up to 5.8 times, this work not only demonstrates the feasibility of conducting high-efficiency SSL training but also paves the way for broader accessibility and promotes advancement in SSL research particularly for prototyping and initial testing of SSL ideas.

Deep neural networks have enhanced the performance of decision making systems in many applications, including image understanding, and further gains can be achieved by constructing ensembles. However, designing an ensemble of deep networks is often not very beneficial since the time needed to train the networks is generally very high or the performance gain obtained is not very significant. In this paper, we analyse an error correcting output coding (ECOC) framework for constructing ensembles of deep networks and propose different design strategies to address the accuracy-complexity trade-off. We carry out an extensive comparative study between the introduced ECOC designs and the state-of-the-art ensemble techniques such as ensemble averaging and gradient boosting decision trees. Furthermore, we propose a fusion technique, that is shown to achieve the highest classification performance.