Ahmed Bourouis

Academic and research departments

Centre for Vision, Speech and Signal Processing (CVSSP), Surrey Institute for People-Centred Artificial Intelligence (PAI).

About

My research project

Sketch Abstraction for Human Cognitive process Analysis

My research focuses on using generative models to give people more control and creativity with visual content.

Before starting my PhD, I completed my MSc in AI at the University of Paris Saclay, where I worked on several projects, including 3D reconstruction and molecular dynamics simulation modeling.

Supervisors

Publications

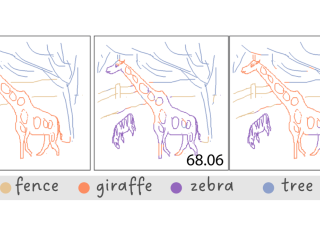

This dataset contains the training, validation and test data used in the paper "Open Vocabulary Scene Sketch Semantic Understanding" by Ahmed Bourouis, Judith Ellen Fan and Yulia Gryaditskaya, CVPR 2024. It contains our split of the sketches from the FS-COCO dataset into training, validation and test sets. For the validation and test sets, we provide stroke-level annotations that assign strokes to different categories, as shown in the images above. The details are provided below.

We study the underexplored but fundamental vision problem of machine understanding of abstract freehand scene sketches. We introduce a sketch encoder that produces a semantically aware feature space, which we evaluate by testing its performance on a semantic sketch segmentation task. To train our model, we rely only on the availability of bitmap sketches with their brief captions and do not require any pixel-level annotations. To generalize to a large set of sketches and categories, we build on a vision transformer encoder pretrained with the CLIP model. We freeze the text encoder and perform visual-prompt tuning of the visual encoder branch while introducing a set of critical modifications. First, we augment the classical key-query (k-q) self-attention blocks with value-value (v-v) self-attention blocks. Central to our model is a two-level hierarchical network design that enables efficient semantic disentanglement: the first level ensures holistic scene sketch encoding, and the second level focuses on individual categories. In the second level of the hierarchy, we then introduce cross-attention between the textual and visual branches. Our method outperforms zero-shot CLIP in segmentation pixel accuracy by 37 points, reaching an accuracy of 85.5% on the FS-COCO sketch dataset. Finally, we conduct a user study that allows us to identify further improvements needed in our method to reconcile machine and human understanding of scene sketches.
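To make the distinction between key-query and value-value self-attention concrete, here is a minimal, dependency-free sketch. It is not the authors' implementation: it assumes that v-v self-attention means computing the attention weights from the value vectors themselves, i.e. softmax(V Vᵀ / √d) V, and it says nothing about how the paper combines the two block types. The function names and the toy token values are hypothetical.

```python
import math

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Multiply an n x k matrix by a k x m matrix (lists of lists)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def transpose(a):
    return [list(col) for col in zip(*a)]

def attention(left, right, values):
    """Return softmax(left @ right^T / sqrt(d)) @ values and the weights."""
    d = len(left[0])
    scores = matmul(left, transpose(right))
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, values), weights

def qk_self_attention(q, k, v):
    # Classical block: weights come from query-key similarity.
    return attention(q, k, v)

def vv_self_attention(v):
    # Assumed v-v block: weights come from value-value similarity,
    # so each token attends to tokens whose values resemble its own.
    return attention(v, v, v)

# Toy example: three tokens with two-dimensional features (made-up numbers).
q = [[0.5, 0.2], [0.1, 0.9], [0.3, 0.3]]
k = [[0.4, 0.1], [0.2, 0.8], [0.6, 0.6]]
v = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

out_qk, w_qk = qk_self_attention(q, k, v)
out_vv, w_vv = vv_self_attention(v)
```

In this toy setup, the first token's v-v weights favour itself and the third token over the second, because its value vector is orthogonal to the second token's. That is the intuition behind using value similarity to group semantically related patches, under the assumption stated above.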